We are now more convinced than ever of the power of Artificial Intelligence (AI) to transform lives and businesses. AI and machine learning already have countless applications, and we regularly see and hear of exciting ways these technologies are being used to add value or to perform tasks once deemed impossible for human intelligence alone. In the anti-money laundering (AML) compliance space, the potential of AI is immense. Many banks have ongoing pilot projects with multiple vendors after regulators, including the US Financial Crimes Enforcement Network (FinCEN), encouraged banks “to consider, evaluate, and, where appropriate, responsibly implement innovative approaches to meet their Bank Secrecy Act/anti-money laundering (BSA/AML) compliance obligations, in order to further strengthen the financial system against the illicit financial activity.” The increasing complexity of AML threats during the COVID-19 pandemic, ever-growing volumes of data to analyse, false alerts rising to unmanageable levels, continued reliance on manual processes and the ballooning cost of compliance are prompting many financial institutions to adopt modern technology and improve their risk profile.

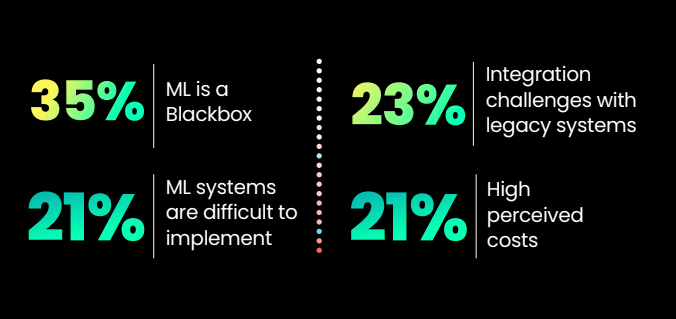

Many banks have been able to develop scientifically sound machine learning algorithms that deliver clear gains in effectiveness and efficiency. However, most of these projects are struggling to make it out of the lab, because deploying a machine learning model in production in a way that adds real value is harder than expected. Many banks are stuck at the AI implementation stage, where they face barriers they had not anticipated. During a webinar, we asked our audience which barriers prevent their organizations from adopting AI in AML compliance, and we got the following results.

Survey: Factors Inhibiting AI Adoption in AML Programs

Crossing this ‘AI chasm’ is often difficult, but not impossible. Here, we take a closer look at some common myths that hinder AI implementation and counter them with relevant facts.

Myth 1: AI systems need massive volumes of data to be effective

Of course, data is at the heart of all machine learning models. However, it is the quality of data, rather than its quantity, that determines a machine learning model’s usefulness in the real world. For machine learning, the basic rule is ‘garbage in, garbage out’. There are ways to build effective, deployable machine learning models from a minimal set of historical data. However, for algorithms to become smarter over time, they need a steady supply of new data. These models should be able to collect, ingest and learn from incremental data and update themselves automatically at regular intervals.
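To make the idea of learning from incremental data concrete, here is a minimal Python sketch, assuming a simple online logistic-regression scorer. The class name `IncrementalAlertModel`, its parameters and the two-feature inputs are hypothetical and chosen only for illustration; real AML models are considerably more involved. The point it shows is that each refresh nudges the existing weights using only the newest labelled alerts, instead of retraining from scratch on the full history.

```python
import math
from dataclasses import dataclass, field


@dataclass
class IncrementalAlertModel:
    """Hypothetical online logistic-regression alert scorer.

    Each call to partial_fit updates the current weights with a new batch of
    labelled alerts (1 = confirmed suspicious, 0 = false positive).
    """
    n_features: int
    learning_rate: float = 0.1
    weights: list[float] = field(default_factory=list)
    bias: float = 0.0

    def __post_init__(self) -> None:
        # Start from all-zero weights unless weights were supplied.
        if not self.weights:
            self.weights = [0.0] * self.n_features

    def predict_proba(self, x: list[float]) -> float:
        """Probability that the transaction described by x is suspicious."""
        z = self.bias + sum(w * xi for w, xi in zip(self.weights, x))
        # Numerically stable sigmoid for large positive or negative z.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        ez = math.exp(z)
        return ez / (1.0 + ez)

    def partial_fit(self, batch: list[tuple[list[float], int]]) -> None:
        """One pass of stochastic gradient descent over the new batch only."""
        for x, label in batch:
            error = self.predict_proba(x) - label  # gradient of log-loss w.r.t. z
            self.bias -= self.learning_rate * error
            self.weights = [
                w - self.learning_rate * error * xi
                for w, xi in zip(self.weights, x)
            ]
```

In practice, `partial_fit` would be called on a schedule (for example, after each batch of investigator-reviewed alerts), with the refreshed model validated before it replaces the live one.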

Myth 2: AI is a ‘black box’; you give it an input and you get an output

In general, the way an AI algorithm produces an output from input data by correlating specific data features is difficult for data scientists and users to interpret. Many renowned AI projects were abandoned because of this issue, and the same problem applies in the banking industry. If regulators ask how an AI model reached a conclusion on a banking problem, banks should be able to explain it. Such an audit is not possible with a ‘black box’ AI model. Most widely accepted model governance frameworks treat model transparency as a key requirement for adoption. Research into building transparent models is ongoing. For example, Tookitaki has created a framework and method for building explainable machine learning models. The patent-pending ‘Glass-box’ approach helps create transparent AI models with interpretable predictions. It provides actionable insights to users, enabling them to make business-relevant decisions more quickly.
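The ‘Glass-box’ method itself is not described here, so the following is only a generic illustration of what an interpretable prediction can look like: a minimal Python sketch, assuming an additive (logistic) risk score whose per-feature contributions double as reason codes. The feature names, weights and bias are hypothetical values chosen for the example, not learned from real data.

```python
import math

# Hypothetical, hand-set weights for illustration only; a real model would learn these.
WEIGHTS = {
    "cash_deposits_near_threshold": 1.8,
    "high_risk_jurisdiction": 1.2,
    "new_account": 0.6,
    "long_customer_tenure": -0.9,
}
BIAS = -2.0


def explain_alert(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the alert probability plus each feature's contribution to the
    log-odds, ranked by absolute size, so a reviewer can see why it scored high."""
    # Features missing from the input are treated as 0 (not present).
    contributions = {name: w * features.get(name, 0.0) for name, w in WEIGHTS.items()}
    log_odds = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return probability, ranked
```

For a transaction with cash deposits near the reporting threshold, a high-risk jurisdiction and a long customer tenure, the function returns a probability of about 0.52 and lists `cash_deposits_near_threshold` as the largest contributor, which is the kind of answer an investigator or regulator can audit.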

Myth 3: AI systems are difficult to integrate into existing systems

In the machine learning lifecycle, integration into existing systems comes after exploratory data analysis, model selection and model evaluation. The ability of a machine learning model to integrate with upstream and downstream systems is crucial for its successful deployment in production. Modern engineering techniques make it possible to integrate models into existing systems smoothly. For example, Tookitaki’s AI-enabled solutions come with pre-packaged connectors for various data sources, making them adaptable to different enterprise architectures and upstream systems. Also, well-designed REST interfaces and detailed integration guides make it easier for downstream applications to consume the output of machine learning pipelines.

Myth 4: It is expensive to deploy an AI-powered AML system in production

Various factors affect the cost of an AI-powered AML system. First of all, institutions can choose between in-house development and third-party software. From a cost perspective, third-party options fare better. Data format, data storage, data structure, processing speed and dashboard requirements are other areas where firms can make choices and optimize according to their needs. To save significantly on hardware, software and licenses, they can also opt for cloud- and API-based deployment models. In short, the cost of implementing AI depends largely on the customer’s requirements.

Myth 5: AI systems have a longer ROI realization period

Business users often have concerns about the return on investment (ROI) of an AI system. Generalised, pre-packaged AI models for AML compliance help financial institutions avoid starting from scratch. Backed by the vendor’s domain and technology expertise, these models can be implemented easily, giving faster time-to-value. They can be adapted quickly to existing AML compliance workflows, and human resources can be allocated optimally to suit specific needs.

To overcome the barriers to AI implementation in AML programs, financial institutions should identify the areas where AI is needed most. These may include transaction monitoring, name, sanctions and payment screening, and customer risk scoring. Once these areas are decided, institutions need to consider their integration options and deployment architecture. When selecting vendors, they should give preference to those that provide transparent models and a robust model governance framework in which models are updated automatically as new data arrives.

There are proven examples, such as that of Tookitaki, of putting cutting-edge machine learning research into production. Deploying AI-powered AML systems in production to improve operational efficiency and returns is just the beginning. Financial institutions with productised AI-based AML models can also enhance their financial crime detection by leveraging collective intelligence. Join our virtual roundtable on ‘Federated Learning: Bringing together the industry’s AML intelligence’ to explore a future in which AML patterns (not customer data) are shared so that institutions can stop bad actors together.
